Using AgentOps with LiteLLM

Initialize AgentOps and use LiteLLM in your code
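A minimal sketch of this step. It assumes the `agentops` and `litellm` packages are installed (for example with `pip install agentops litellm`) and that `AGENTOPS_API_KEY` and `OPENAI_API_KEY` are set in your environment. The model name `gpt-4o-mini`, the helper names `build_messages` and `run_agent`, and the prompt are illustrative choices, not part of either library. The sketch calls `litellm.completion` through the module instead of binding it with `from litellm import completion`. If AgentOps records LiteLLM calls by patching that module attribute (an assumption about its instrumentation), a name imported directly could bypass the patch.

```python
import os

# Keys this sketch expects. OPENAI_API_KEY is only needed because the
# example model below is an OpenAI model (an illustrative assumption).
REQUIRED_ENV_VARS = ("AGENTOPS_API_KEY", "OPENAI_API_KEY")


def build_messages(prompt: str) -> list[dict[str, str]]:
    """Build a chat history in the OpenAI message format that LiteLLM accepts."""
    return [{"role": "user", "content": prompt}]


def run_agent(prompt: str, model: str = "gpt-4o-mini") -> str:
    """Hypothetical helper: start AgentOps, then make one LiteLLM call."""
    # Imported here so the helpers above work without the SDKs installed.
    import agentops
    import litellm

    # Initialize AgentOps before any LLM calls so they can be recorded.
    agentops.init(api_key=os.environ["AGENTOPS_API_KEY"])

    # Call through the module rather than `from litellm import completion`.
    response = litellm.completion(model=model, messages=build_messages(prompt))

    # LiteLLM returns responses in the OpenAI shape.
    return response.choices[0].message.content


if __name__ == "__main__":
    missing = [name for name in REQUIRED_ENV_VARS if not os.environ.get(name)]
    if missing:
        print(f"Set {', '.join(missing)} before running this example.")
    else:
        print(run_agent("Write a one-sentence summary of what AgentOps does."))
```

Run it as a regular Python script. If either key is missing, it prints which one to set instead of calling the APIs.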

Run your Agent

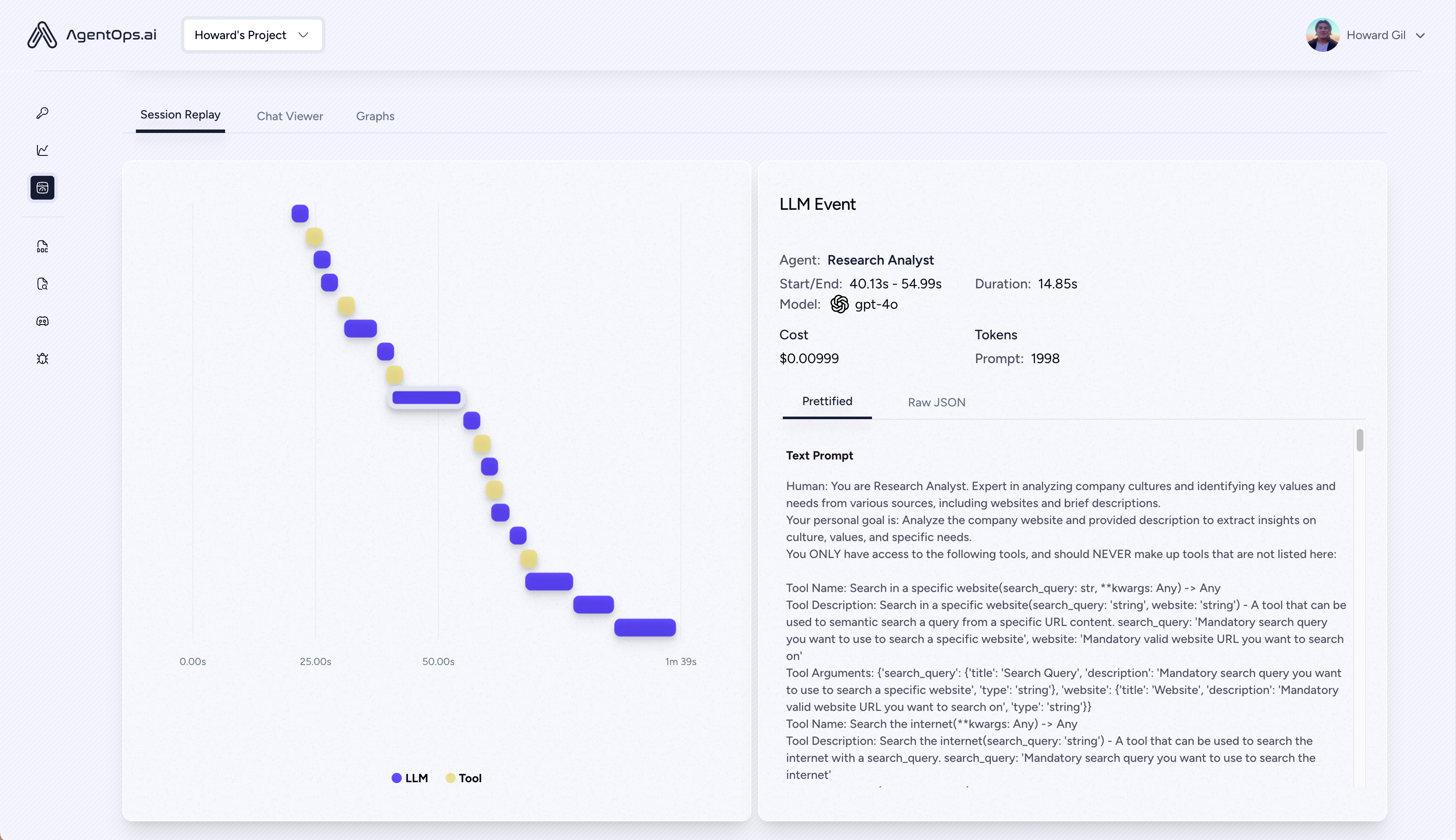

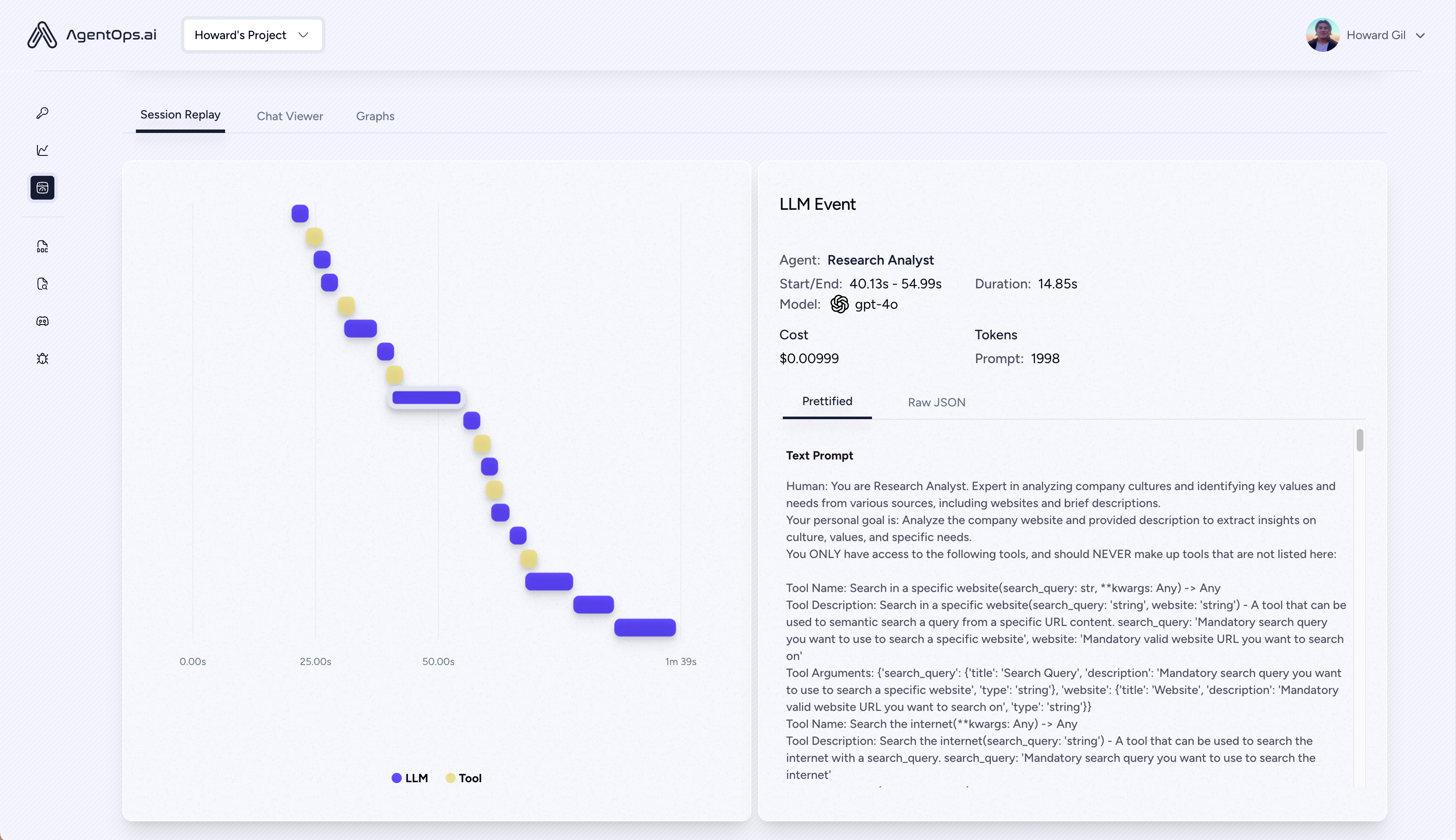

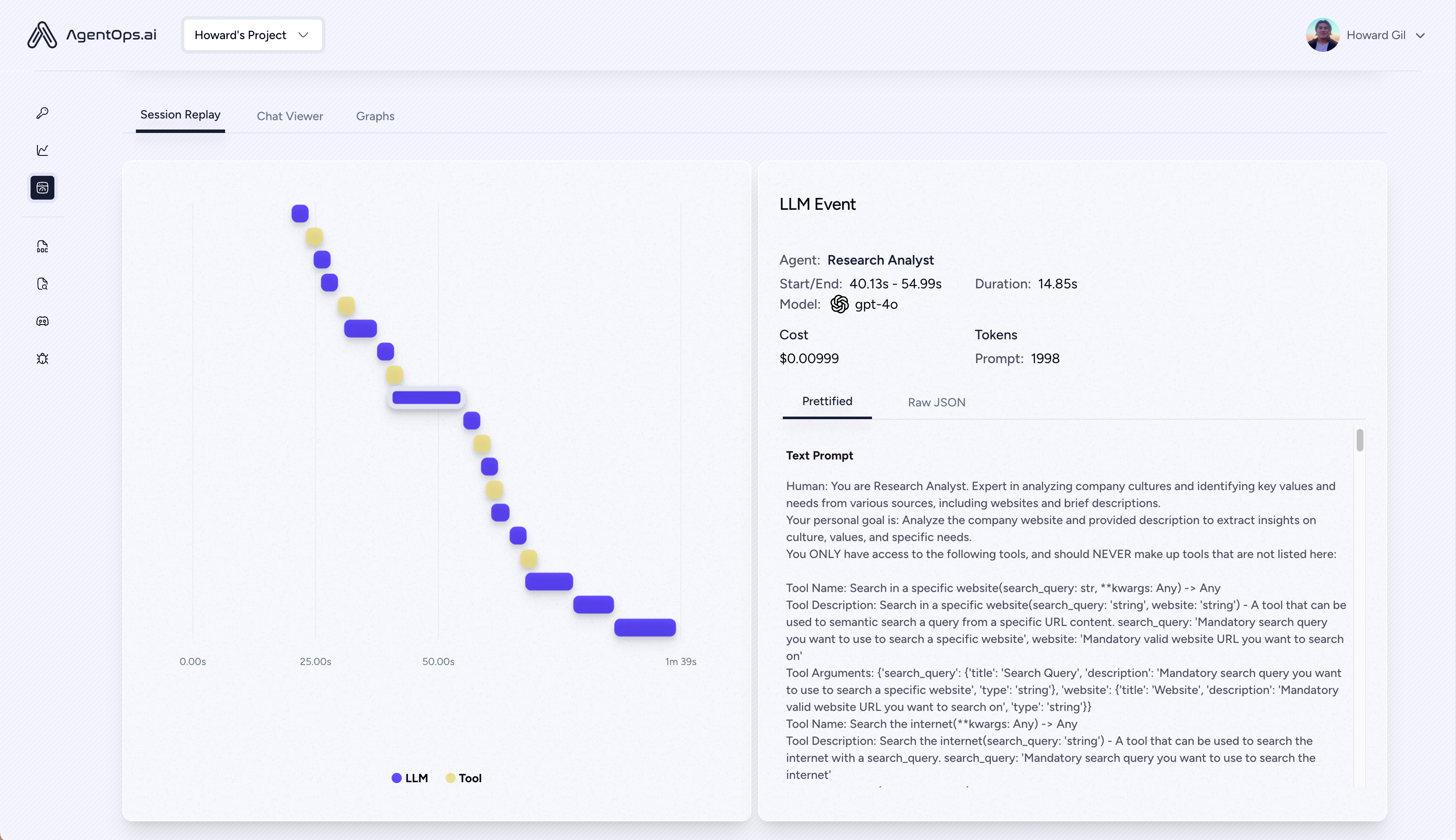

Execute your program and visit app.agentops.ai/drilldown to observe your Agent! 🕵️

Through LiteLLM, call the latest models, including Llama, Mistral, Claude, Gemini, Gemma, DALL-E, and Whisper, using the OpenAI format.
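Because LiteLLM puts every provider behind the same OpenAI-style `completion` call, switching models is mostly a matter of changing the `model` string. The sketch below sends one prompt to several models and reads each reply from the same `choices[0].message.content` path. The strings in `EXAMPLE_MODELS` are illustrative assumptions, so check LiteLLM's provider documentation for exact names and for the API key each provider needs. `ask_each_model` and `fake_completion` are hypothetical helpers. The sketch covers chat models only; image and audio models such as DALL-E and Whisper go through separate LiteLLM functions.

```python
from types import SimpleNamespace
from typing import Any, Callable, Optional

# Illustrative LiteLLM model strings (assumptions): provider prefixes and
# model names vary, and each provider needs its own API key.
EXAMPLE_MODELS = [
    "gpt-4o-mini",
    "anthropic/claude-3-haiku-20240307",
    "gemini/gemini-1.5-flash",
    "mistral/mistral-small-latest",
]


def ask_each_model(
    prompt: str,
    models: list[str],
    complete: Optional[Callable[..., Any]] = None,
) -> dict[str, str]:
    """Send the same OpenAI-format request to each model and collect replies."""
    if complete is None:
        # Real calls go through LiteLLM; provider keys must be in the environment.
        import litellm

        complete = litellm.completion

    messages = [{"role": "user", "content": prompt}]
    replies = {}
    for model in models:
        response = complete(model=model, messages=messages)
        # Every provider's reply comes back in the same OpenAI response shape.
        replies[model] = response.choices[0].message.content
    return replies


def fake_completion(model: str, messages: list[dict[str, str]]) -> SimpleNamespace:
    """Offline stand-in that mimics the response shape, for trying the loop without keys."""
    text = f"{model} received: {messages[-1]['content']}"
    message = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(message=message)])
```

For example, `ask_each_model("Hello", EXAMPLE_MODELS)` makes real calls, while passing `complete=fake_completion` exercises the same loop offline. If AgentOps has been initialized first, the real calls are the ones you would expect to see in the dashboard.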